Research & Development

R&D Overview

At the heart of VERSES: Active Inference

We are investigating this hypothesis using Active Inference, an approach to modeling intelligence based on the physics of information. With Active Inference, we can design and build software programs, or agents, that make decisions and act in real time on incoming data. These agents update their beliefs as new data arrive and use their understanding of the world to plan ahead and act appropriately within it.

Our approach allows us explicit access to the beliefs of agents. That is, it gives us tools for discovering what an agent believes about the world and how it would change its beliefs in light of new information. Our models are fundamentally Bayesian and thus tell us what an optimal agent should believe and how it should act to maintain a safe and stable environment.
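
As a rough illustration of what "explicit access to beliefs" can mean in a Bayesian agent, the sketch below applies Bayes' rule to a small discrete model. The door scenario, the state and observation names, and the `update_belief` helper are all hypothetical and chosen only for illustration; this is not the VERSES implementation.

```python
def update_belief(prior, likelihood, observation):
    """Return the posterior over hidden states after one observation.

    prior:      dict mapping state -> P(state)
    likelihood: dict mapping state -> dict mapping observation -> P(observation | state)
    """
    # Bayes' rule: posterior is proportional to prior times likelihood.
    unnormalized = {s: prior[s] * likelihood[s][observation] for s in prior}
    evidence = sum(unnormalized.values())
    if evidence == 0:
        raise ValueError("observation has zero probability under the model")
    return {s: p / evidence for s, p in unnormalized.items()}


# Hypothetical two-state world: is a door open or closed?
prior = {"open": 0.5, "closed": 0.5}
likelihood = {
    "open": {"draft": 0.8, "no_draft": 0.2},
    "closed": {"draft": 0.1, "no_draft": 0.9},
}

# The belief is an ordinary, inspectable data structure at every step.
belief = prior
for obs in ["draft", "draft", "no_draft"]:
    belief = update_belief(belief, likelihood, obs)
    print(obs, belief)
```

Because the belief is stored explicitly, one can also ask how it would change under a hypothetical observation by calling `update_belief` without committing to the result.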

Our goal is to leverage Active Inference to develop safe and sustainable AI systems at scale:

Opening the black box. Equip agents with interpretable models constructed from explicit labels, thus making our agents’ decisions auditable by design.

Hybrid automated and expert systems. Active inference affords an explicit methodology for incorporating existing knowledge into AI systems and encourages structured explanations.

Optimal reasoning. Bayesian systems combine what agents already know (prior knowledge) with what they learn from newly observed data, which is the optimal way to reason in the face of uncertainty.

Optimal planning. We implement the control of actions using Bayesian reasoning and decision-making, allowing for optimal action selection in the presence of risk and uncertainty.

Optimal model selection. Bayesian active inference can automatically select the best model and identify “the right tool for the right job.”

Explanatory power and parsimony. Instead of maximizing reward, active inference agents resolve uncertainty, balancing exploration and exploitation.

Intrinsic safety. Built on the principles of alignment, ethics, empathy, and inclusivity, agents maximize safety at all levels.
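
The model selection and parsimony points above can be made concrete with a textbook example of Bayesian model comparison. The sketch below compares two hypothetical models of a coin, one with a fixed fair bias and one with an unknown bias under a uniform prior, by their marginal likelihoods. The function names and the coin scenario are assumptions made for illustration and are not taken from VERSES software.

```python
from math import factorial


def evidence_fair(heads, flips):
    """Marginal likelihood of one specific flip sequence under a fair coin."""
    return 0.5 ** flips


def evidence_unknown_bias(heads, flips):
    """Marginal likelihood of the same sequence when the bias is unknown.

    With a uniform prior on the bias, integrating p^heads * (1-p)^tails
    over p gives heads! * tails! / (flips + 1)!.
    """
    tails = flips - heads
    return factorial(heads) * factorial(tails) / factorial(flips + 1)


def model_posterior(heads, flips, prior_fair=0.5):
    """Posterior probability of each model given the observed flips."""
    weighted_fair = evidence_fair(heads, flips) * prior_fair
    weighted_bias = evidence_unknown_bias(heads, flips) * (1.0 - prior_fair)
    total = weighted_fair + weighted_bias
    return {"fair": weighted_fair / total, "unknown_bias": weighted_bias / total}


balanced = model_posterior(heads=5, flips=10)  # data a fair coin explains well
skewed = model_posterior(heads=9, flips=10)    # data that call for a biased coin
print(balanced)
print(skewed)
```

With balanced data the simpler fair-coin model is favored even though the flexible model could also fit it; the flexible model wins only when the data demand it. This is the sense in which Bayesian model comparison trades explanatory power against parsimony.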

Achieving alignment versus maximizing reward: The homeostatic objective

Active Inference provides a recipe for objective function selection based upon physics and information theory. Active Inference agents do not merely seek to maximize a reward function. Instead, Active Inference automatically balances exploration and exploitation, allowing for the design of curious, information-seeking agents. Crucially, Active Inference naturally extends to multi-agent systems that share information and work together to create sustainable ecosystems. This inherently promotes safer interactions: agents understand and model each other and identify with entities around them, forming a collective intelligence.

Addressing the tractability of Bayesian AI

The prevailing view in state-of-the-art machine learning research is that a purely Bayesian approach to machine intelligence, while widely accepted as the best approach in theory, cannot be scaled because of its computational complexity. This belief rests on the unstated assumption that sophisticated Bayesian models must mimic the structure of modern deep belief networks and employ time- and energy-consuming gradient-descent-based methods for learning. We are overcoming these issues by adopting a more brain-like modular approach to neural network architecture that enables fast, gradient-free learning.

Addressing tractability has emerged as one of our core research areas. We are developing a new industry-optimized, scalable Bayesian inference framework that we hope will do for Bayesian machine intelligence what autograd did for deep learning.

Our initial results are promising. To showcase the technology to the wider machine learning community, we will compare it against state-of-the-art AI on benchmarks such as the Atari 100k benchmark challenge. As we continue to develop this technology, we will disseminate our findings in peer-reviewed journals and conferences. Stay tuned.

Safety & Governance

We believe that any safe AI requires built-in systems of governance. It cannot be an afterthought.

Due to their explainability and intrinsic safety, Active Inference-based agents lend themselves to proper frameworks for governance. We have been working on formulating these governance systems as a set of open standards along with the IEEE. For more information, see the related content on our AI Governance page.

Our Research

The VERSES Research and Development team comprises some of the world’s leading experts in diverse disciplines such as computational neuroscience, computer science and machine learning, engineering, social science, and philosophy.

View our research team’s Google Scholar profile.

Research Roadmap

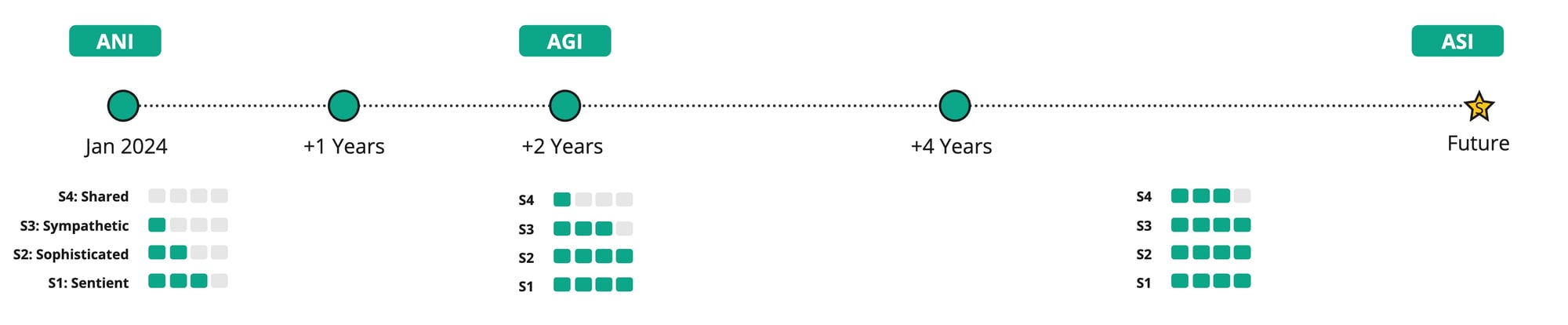

The development of AI is often presented as a staged progression from so-called “Artificial Narrow Intelligence” (ANI)—systems that are able to solve problems within a narrowly defined domain—to progressively more powerful and adaptable systems able to solve problems in a more domain-general manner: so-called “Artificial General Intelligence” (AGI). Beyond that, artificial systems might even be designed that surpass general human cognitive abilities: “Artificial Super Intelligence” (ASI).

Our approach focuses rather on designing a diversity of Intelligent Agents: software programs capable of planning, making decisions, and acting based on real-time updates to their models of the world, informed by streaming sensory data. The collective intelligence of such agents, interacting sustainably with one another and with human beings, is Shared Intelligence (S4 on our roadmap), which is our north star and might be regarded as a version of ASI.

Working backward, achieving this goal requires the design of agents capable of taking human perspectives and thus of acting considerately toward human beings: Sympathetic Intelligence (S3). In turn, this perspective-taking ability requires the capacity to reason about counterfactual situations and possible futures: Sophisticated Intelligence (S2). Sophisticated intelligence is a more powerful version of Sentient Intelligence (S1), the most basic implementation of active inference, which at a minimum involves beliefs about actions and their sensory consequences.

Active Inference Development Stages

S0: Systemic Intelligence

This is contemporary state-of-the-art AI; namely, universal function approximation (mapping from input or sensory states to outputs or action states) that optimizes some well-defined value function or cost of (systemic) states. Examples include deep learning and Bayesian reinforcement learning.

S1: Sentient Intelligence

Sentient behavior or active inference based on belief updating and propagation (i.e., optimizing beliefs about states as opposed to states per se); where “sentient” means behavior that looks as if it is driven by beliefs about the sensory consequences of action. This entails planning as inference; namely, inferring courses of action that maximize expected information gain and expected value, where value is part of a generative (i.e., world) model; namely, prior preferences. This kind of intelligence is both information-seeking and preference-seeking. It is quintessentially curious, in virtue of being driven by uncertainty minimization, as opposed to reward maximization.
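
One common way to express "expected information gain plus expected value" is a score per action that sums an epistemic term (how much an observation is expected to change beliefs) and a pragmatic term (how well expected observations match prior preferences). The sketch below is a deliberately simplified, one-step version for discrete states and observations. The `expected_free_energy` helper, the "look" and "wait" actions, and all numbers are hypothetical and serve only to illustrate the idea.

```python
from math import log


def expected_free_energy(belief, likelihood, log_preference):
    """Score one action; lower is better.

    belief:         list of P(state)
    likelihood:     likelihood[s][o] = P(observation o | state s) under this action
    log_preference: list of log prior preference for each observation
    Returns (score, expected information gain, expected value).
    """
    n_states, n_obs = len(belief), len(log_preference)
    # Predicted observation distribution under the current belief.
    predicted_obs = [
        sum(belief[s] * likelihood[s][o] for s in range(n_states))
        for o in range(n_obs)
    ]
    # Epistemic term: mutual information between states and observations.
    info_gain = 0.0
    for s in range(n_states):
        for o in range(n_obs):
            joint = belief[s] * likelihood[s][o]
            if joint > 0:
                info_gain += joint * log(likelihood[s][o] / predicted_obs[o])
    # Pragmatic term: expected log preference of the predicted observations.
    expected_value = sum(predicted_obs[o] * log_preference[o] for o in range(n_obs))
    return -(info_gain + expected_value), info_gain, expected_value


belief = [0.5, 0.5]                     # maximally uncertain about two states
log_preference = [log(0.5), log(0.5)]   # no preference between observations
actions = {
    "look": [[0.9, 0.1], [0.1, 0.9]],   # observation is informative about the state
    "wait": [[0.5, 0.5], [0.5, 0.5]],   # observation carries no information
}

scores = {name: expected_free_energy(belief, a, log_preference) for name, a in actions.items()}
best_action = min(scores, key=lambda name: scores[name][0])
print(best_action, scores)
```

With flat preferences, the agent selects the action that resolves uncertainty, which is the curious, information-seeking behavior described above. Making the preferences uneven shifts the balance toward preference-seeking.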

S2: Sophisticated Intelligence

Sentient behavior (as defined under S1) in which plans are predicated on the consequences of action for beliefs about states of the world, as opposed to states per se; that is, a move from “what will happen if I do this?” to “what will I believe or know if I do this?” This kind of inference generally uses generative models with discrete states that “carve nature at its joints”; namely, inference over coarse-grained representations and ensuing world models. This kind of intelligence is amenable to formulation in terms of modal logic, quantum computation, and category theory. This stage corresponds to “artificial general intelligence” in the popular narrative about the progress of AI.

S3: Sympathetic Intelligence

The deployment of sophisticated AI to recognize the nature and dispositions of users and other AI and—in consequence—recognize (and instantiate) attentional and dispositional states of self; namely, a kind of minimal selfhood (which entails generative models equipped with the capacity for Theory of Mind). This kind of intelligence is able to take the perspective of its users and interaction partners—it is perspectival, in the robust sense of being able to engage in dyadic and shared perspective taking.

S4: Shared Intelligence

The kind of collective that emerges from the coordination of Sympathetic Intelligences (as defined in S3) and their interaction partners or users—which may include naturally occurring intelligences such as ourselves, but also other sapient artifacts. This stage corresponds, roughly speaking, to “artificial super-intelligence” in the popular narrative about the progress of AI—with the important distinction that we believe that such intelligence will emerge from dense interactions between agents networked into a hyper-spatial web. We believe that the approach that we have outlined here is the most likely route toward this kind of hypothetical, planetary-scale, distributed super-intelligence.

Stages of Intelligence
